Slide 1 - S2 Perception Core

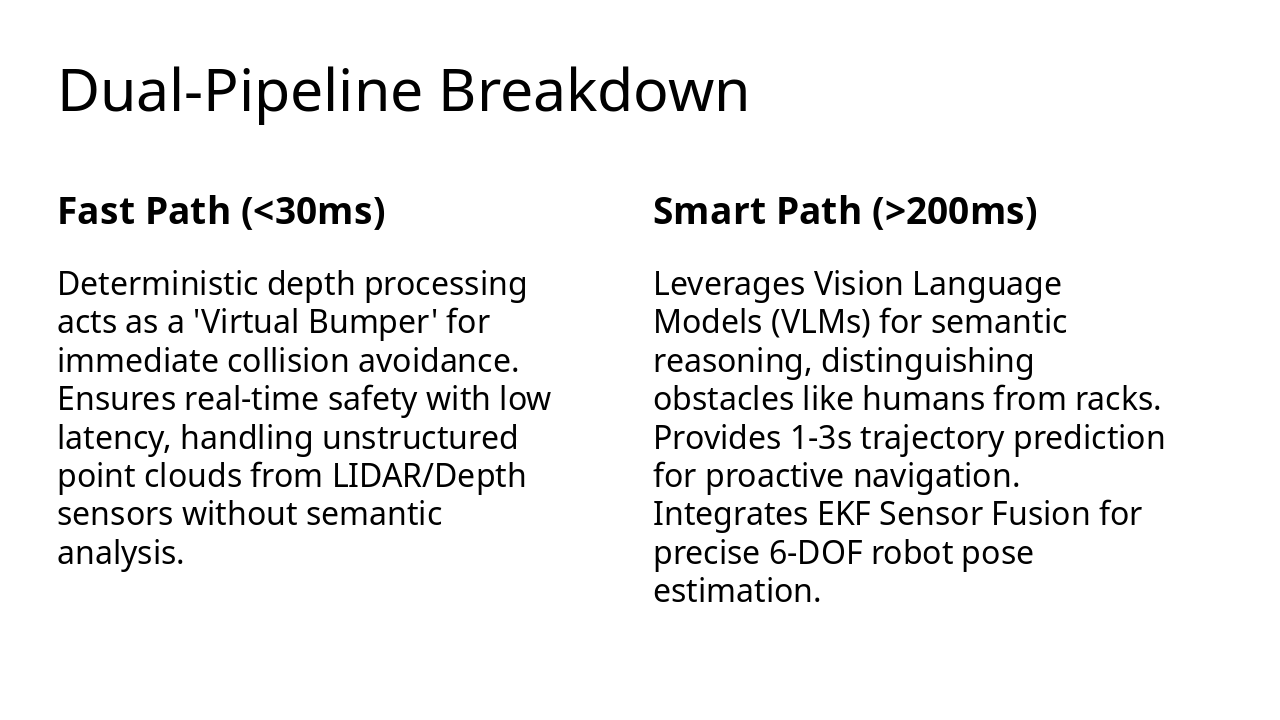

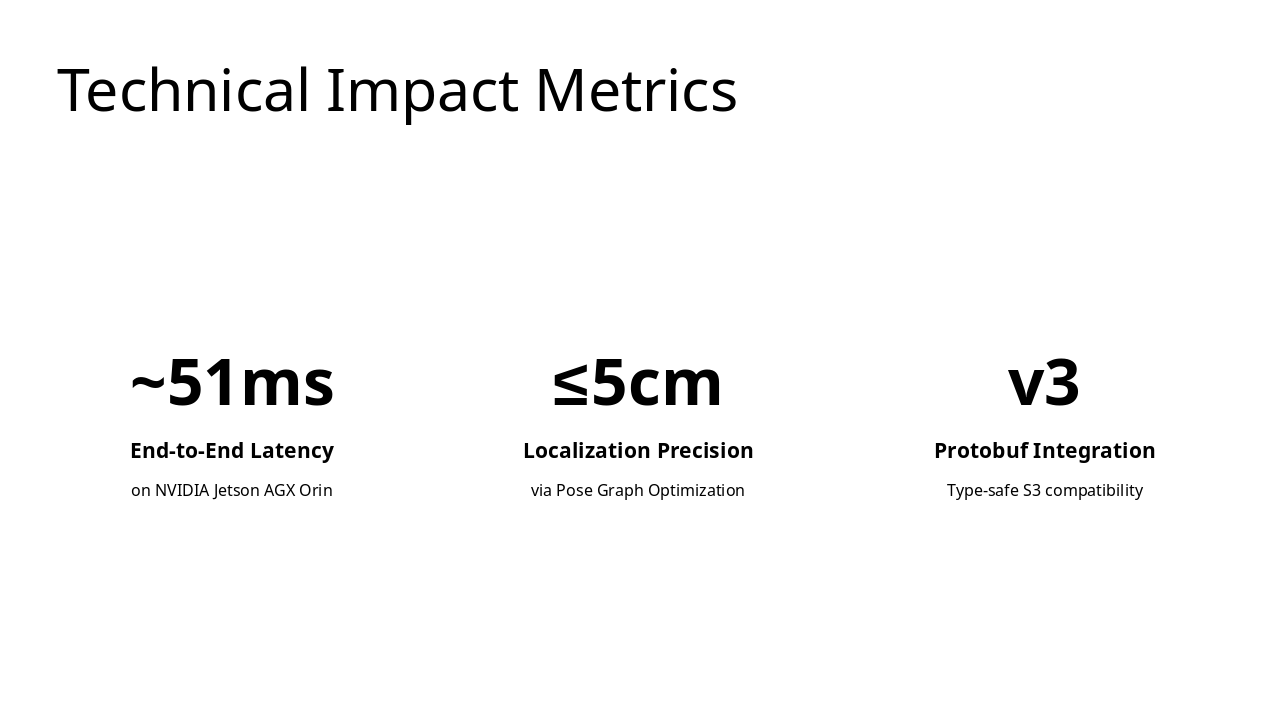

This is the title slide for "S2 Perception Core." Its subtitle states the core's function: transforming raw sensor data into actionable insight using ROS2 and Vision-Language Models (VLMs).

S2 Perception Core

Transforming Raw Sensor Data into Actionable Insight via ROS2 & VLMs