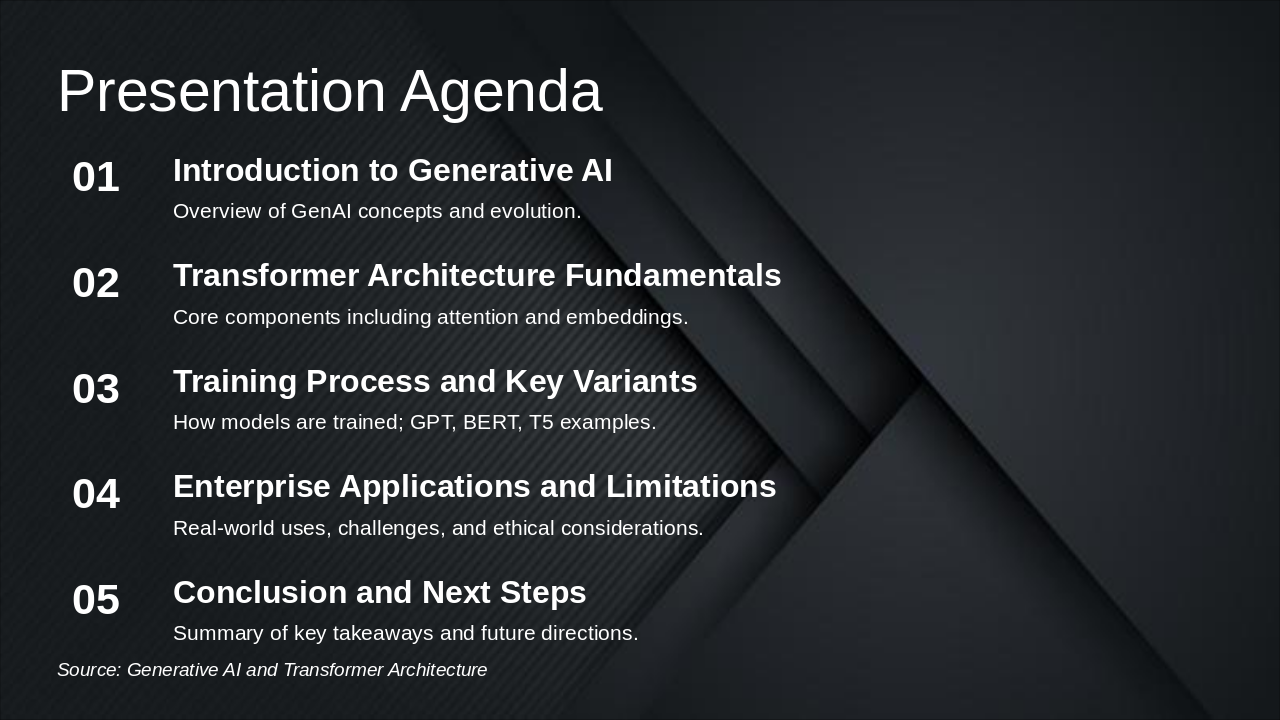

Slide 1 - Generative AI and Transformer Architecture

The title slide reads "Generative AI and Transformer Architecture" and introduces a presentation on those two topics. It contains a placeholder for the presenter's name, space for a company logo, and a subtitle marking the deck as internal training.

Generative AI and Transformer Architecture

Presenter: [Your Name]

[Company Logo Space]

Internal Training

Source: Internal Training Presentation